Problem

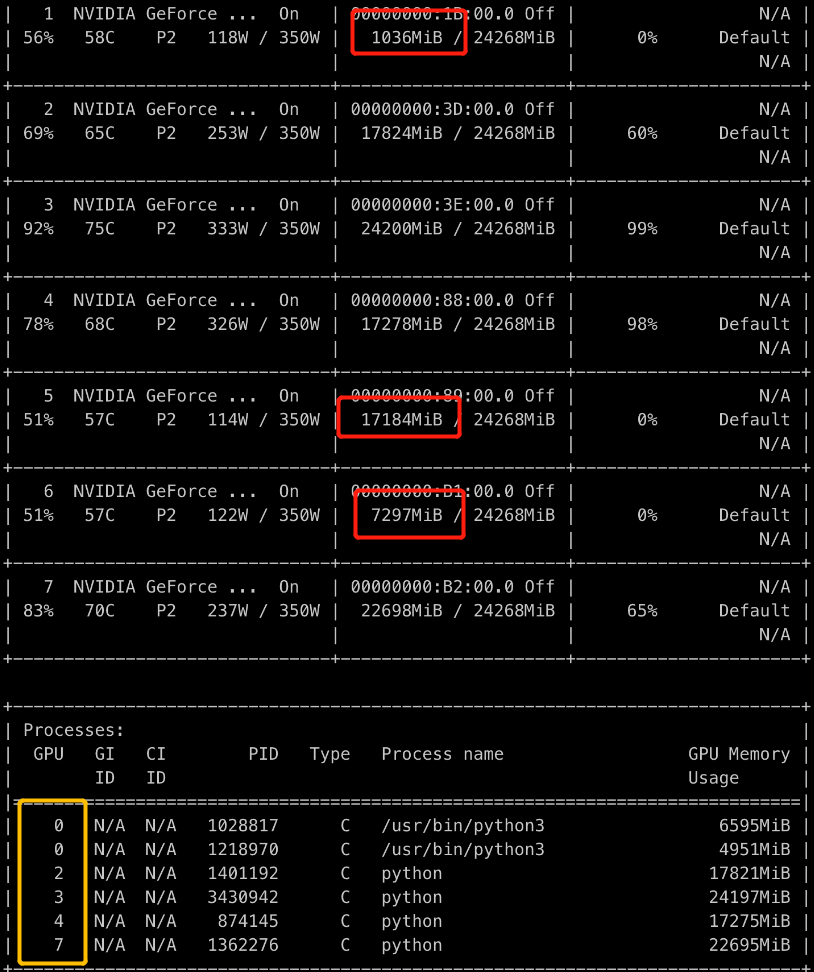

When running nvidia-smi, we see that GPU memory is occupied (see red boxes), but no corresponding process is listed for that GPU (see orange boxes).

Possible Answer

This can be caused by torch.distributed and other multi-process CUDA programs. When the main process terminates, its background processes may stay alive instead of being killed, and they keep holding GPU memory.

- To find out which processes are using the GPU, we can use the following command (replace <id> with the GPU index, e.g. /dev/nvidia0):

      fuser -v /dev/nvidia<id>

This lists all processes that are using the GPU. Note that when it is run as a normal user, only that user's processes are displayed. When it is run as root, the relevant processes of all users are displayed.
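To check every GPU at once rather than one device at a time, the lookup above can be wrapped in a small script. This is only a sketch under a few assumptions: `fuser` comes from psmisc and `ps` from procps, `fuser` prints PIDs on stdout and everything else on stderr, and the glob `/dev/nvidia*` is used in place of a single `/dev/nvidia<id>` (so it also matches helper devices such as `/dev/nvidiactl`). The function names `unique_pids` and `gpu_pids` are made up for this example.

```shell
#!/usr/bin/env bash

# Turn raw `fuser` stdout (whitespace-separated PIDs) read from stdin
# into a sorted list of unique PIDs, one per line.
unique_pids() {
    tr -s ' \t' '\n' | grep -E '^[0-9]+$' | sort -un
}

# Collect the PIDs that hold any NVIDIA device file open.
# stderr is discarded because fuser writes file names and access flags there.
gpu_pids() {
    fuser /dev/nvidia* 2>/dev/null | unique_pids
}

# Show owner, elapsed time and command line for each PID that was found.
for pid in $(gpu_pids); do
    ps -o pid=,user=,etime=,args= -p "$pid"
done
```

As with the plain `fuser` command, running the script as a normal user only reports that user's processes. Running it as root reports everyone's.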

- Then use the following command to kill each process shown above:

      kill -9 [PID]

This kills the process on the GPU. After the processes are killed, the GPU memory should be freed. If it is still occupied, the remaining processes may belong to other users. In that case, ask those users or an administrator to kill them.
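`kill -9` (SIGKILL) gives the process no chance to clean up, so a gentler variant is to send SIGTERM first and fall back to SIGKILL only if the process is still alive after a short wait. The sketch below is one way to do that. It is not part of the original recipe, and the function name `stop_pid` and the default grace period of 5 seconds are arbitrary choices for this example.

```shell
#!/usr/bin/env bash

# Stop a process politely first, and force-kill it only if it ignores SIGTERM.
# Usage: stop_pid <PID> [grace_seconds]
stop_pid() {
    local pid=$1 grace=${2:-5} waited=0

    # Nothing to do if the signal cannot be sent:
    # the process is already gone, or it belongs to another user.
    kill -TERM "$pid" 2>/dev/null || return 0

    # Poll once per second until the process exits or the grace period ends.
    while [ "$waited" -lt "$grace" ]; do
        kill -0 "$pid" 2>/dev/null || return 0
        sleep 1
        waited=$((waited + 1))
    done

    # Still alive after the grace period: equivalent to `kill -9`.
    kill -KILL "$pid" 2>/dev/null || true
}
```

For example, `stop_pid 12345 10` would give the hypothetical PID 12345 up to ten seconds to exit before it is force-killed.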